How many red Christmas baubles on the tree?

Posté le Sat 05 January 2019 dans machine learning • 6 min de lecture

Cet article est le numéro 4 de la série "clustering" :

- Generate datasets to understand some clustering algorithms behavior

- L'algorithme des k-moyennes n'est pas la panacée

- Modèles de mélanges gaussiens : k-moyennes sous stéroïdes

- How many red Christmas baubles on the tree?

- Chaining effect in clustering

- Animate intermediate results of your algorithm

- Accuracy: de la classification supervisée à non supervisée (clustering)

Christmas time is over. It is time to remove the Cristmas tree. But just before removing it, one can ask: How many red Christmas baubles are on the tree?

In order to answer this question, we will proceed with the following steps:

- Transform the picture into a dataframe, which is more convenient to handle.

- Filter the red points from the others.

- Group the red points into homogeneous clusters (in regard to the coordinates).

Transform a picture into "tidy" data

An image is composed by a list of pixels, each having two coordinates and a color value. It can thus be encoded by a matrix where each entry corresponds to a pixel of the image, the entry being the color of the pixel, and the row and column indexes are the coordinates of the pixel. For a grayscale image, the entries of the matrix correspond to the amount of light. For color images, each color is often encoded by red, green and blue values: these three primary colors are added to create the desired color. Therefore, since for each pixel we have three color values (red, green and blue), it is practical to encode an image in a 3d array: one layer of the array is a matrix of red values, and the two other layers are for green and blue.

Let’s transform the image of Christmas tree (download the image here) into a 3d array with the jpeg R library:

library("jpeg")

filename = "tree.jpg"

a = readJPEG(filename)

Handling such a 3d array is not always handy, we often prefer to have a dataframe in a "tidy" format:

- Each column is a variable.

- Each row is an observation.

We can do this transformation with the following R code:

library("dplyr")

getLayer <- function(a, n) {

layer = a[, , n]

layer = as.data.frame(as.table(layer))

colnames(layer) <- c("x", "y", paste0("layer", n))

layer

}

layer1 = getLayer(a, 1)

layer2 = getLayer(a, 2) %>% select(layer2)

layer3 = getLayer(a, 3) %>% select(layer3)

df = layer1 %>% bind_cols(layer2, layer3)

Here, we defined a function getLayer which takes a 3d array a, and the number of the layer we want, n, and outputs a three column dataframe: the columns x and y for the two coordinates, and a column for the values of that layer.

We applied these function three times to get one dataframe per layer. To combine them into one dataframe, bind_cols is used which is way more efficient than joining them.

Filter red points

To filter red points, we consider that they should have more than 50 percent of red (the red Christmas baubles are rather bright, hence a high value of red light). But this rule is not enough: yellow is also composed of red light, and green light. So we add a condition to have at least twice as much red light as green light. The following code do this filtering:

df = df %>% mutate(tmp = case_when(

(layer1 > 0.5) & (layer1 > 2* layer2) ~ 0,

TRUE ~ 1

)) %>% transmute(x=x, y=y, layer1=tmp, layer2=tmp, layer3=tmp)

Here, we transformed our dataframe to encode red points into black ones (value 0 on each layer) and the others in white (value 1, i.e. 100%, on each layer).

Check our red filtering

In order to check that our filtering works, we can convert the filtered dataframe into a 3d array:

library("abind")

library("tidyr")

createMatrixLayer <- function(df, n) {

layerName = paste0("layer", n)

d = df %>% select(x, y, layerName)

d = spread(d, y, layerName) %>% select(-x)

d

}

layer1 = createMatrixLayer(df, 1)

layer2 = createMatrixLayer(df, 2)

layer3 = createMatrixLayer(df, 3)

b = abind(layer1, layer2, layer3, along = 3)

writeJPEG(b, "redChristmasBaubles.jpg")

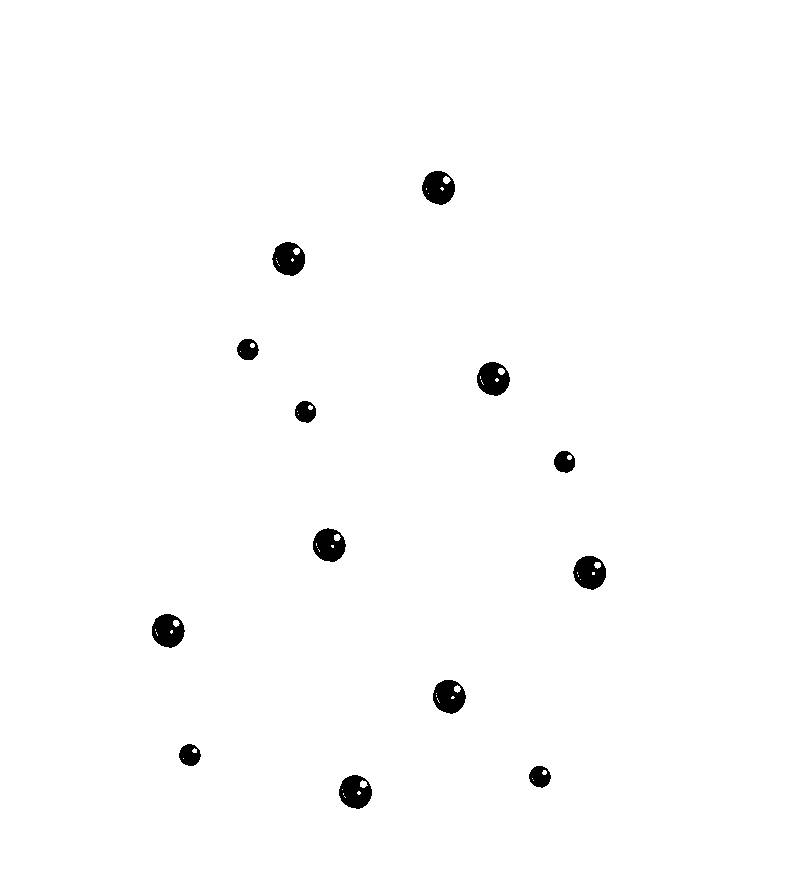

The generated image is:

Leverage the k-means criterion to count the number of red Christmas baubles

We now have a set of black points and one of whites. To count the number of Christmas baubles, we have to separate the black points into clusters, each corresponding to a ball. It appears that the balls are of somewhat equal size on the image, having therefore about the same number of points: we seek clusters of equal proportions. We can roughly consider that the balls are generated by a mixture of Gaussians with each zero covariance (balls are round) and of equal variance (balls are of same radius). This matches the k-means assumptions.

Let’s see how one can find the best number of clusters to set for the k-means algorithm.

Decrease of the criterion along with the number of clusters

K-means aims to minimize the total sum of the squared distances between the center of the cluster and the points of the cluster:

where:

- \(K\) is the number of clusters;

- \(x_i , i \in [1, N]\) is a set of \(N\) vectors;

- \(z_{ik} \in {0, 1}\) indicates if the vector \(x_i\) belongs to the cluster \(k\);

- \(\mu_k \in \mathbb{R}^p\), the center of the cluster \(k\).

When \(K = N\) each cluster is composed of only one point, so \(xxx = 0\). Let’s now consider \(K < N\). There exists at least one cluster with at least two points. Without loss of generality, let’s assume this cluster is the \(K^{th}\) cluster. With \(a\) a point of the cluster \(K\), we can write the minimal value of the criterion for \(K\) clusters as follows:

If we put \(a\) in its own new cluster \(K+1\), \(x_a = \mu_{K+1}\), so we have:

and therefore, we found one assignation such that:

The objective of k-means is therefore decreasing with the number of clusters.

From local minima to a global minimum

Lets apply k-means on the data, taking the coordinates of the red points as features. We apply k-means with several number of clusters (from 2 to 100), and plot the criterion found for each number of clusters:

library("ggplot2")

df = df %>%

filter(layer1==0) %>%

mutate(x=as.numeric(x), y=as.numeric(y)) %>%

select(x, y)

l = c()

for (k in seq(2, 100, 1)) {

km = kmeans(df, k)

l = c(l, km$tot.withinss)

}

g = data.frame(x = seq(2, 100), y = l) %>%

ggplot(aes(x, y)) +

geom_line(color='steelblue') +

geom_point(color='steelblue') +

labs(x = "Number of clusters",

y = "Total within sum of square")

plot(g)

We can see that the criterion is not always decreasing. This is because k-means finds a local minimum of the criterion. The result depends on the initialization of the algorithm, which is random. We can run the algorithm sevaral times (here 200) for a given cluster number, and retain the result minimizing the criterion:

l = c()

for (k in seq(2, 100, 1)) {

km = kmeans(df, k, iter.max = 20, nstart=200)

l = c(l, km$tot.withinss)

}

g = data.frame(x = seq(2, 100), y = l) %>%

ggplot(aes(x, y)) +

geom_line(color='steelblue') +

geom_point(color='steelblue') +

labs(x = "Number of clusters",

y = "Total within sum of square")

plot(g)

One can see an elbow on the curve which indicates a good number of clusters.

Elbow method

The elbow method consists in plotting the values of the criterion found by k-means when running over an increasing number of clusters. If one can see an elbow, it means that the criterion is decreasing faster before the elbow than after it:

- Before the elbow, augmenting the number of clusters translates to an important drop of the value of the criterion, thus a higher number of clusters is meaningful, i.e. the points are much more concentrated around the centroids of the clusters;

- After the elbow, the drop in the value of the criterion is small, thus points are not much more concentrated around the centroids with an increase in the number of clusters.

Let's zoom in, and draw a vertical line where we observe an elbow:

g = data.frame(x = seq(2, 100), y = l) %>%

filter(x <= 20, x >= 7) %>%

ggplot(aes(x, y)) +

geom_line(color='steelblue') +

geom_point(color='steelblue') +

geom_vline(xintercept = 13, linetype="dashed") +

ggtitle("Number of red balls") +

labs(x = "Number of clusters",

y = "Total within sum of square")

plot(g)

One can see a clear elbow when the number of clusters is 13. It corresponds to the number of red Christmas baubles on the tree.