Trends in classification and clustering research fields

Posté le Sat 05 October 2019 dans Meeting • 6 min de lecture

From September 3rd to 5th, I was welcomed by LORIA and INIST to take part in the XXVI Meeting of the Société Francophone de Classification held in Nancy.

This conference was an opportunity to present my work on the clustering of textual content. If we can regularly read articles on classification, they often tend to linger over image processing. The summer news has shown us again with the "disclosure" during a Facebook breakdown of the automatic labeling of photos published on the social network. This event was therefore for me the opportunity to present my work on a ubiquitous source but less highlighted: the textual content.

It was also an opportunity to get a glimpse of recent methods applied to other areas and to exchange with other researchers on various issues that we may encounter in our data projects at Kernix.

I present here the different trends of this field of data science discussed during these meeting, and starting from the basic material that we handle on a daily basis, the data, classified according to their typologies.

Textual data

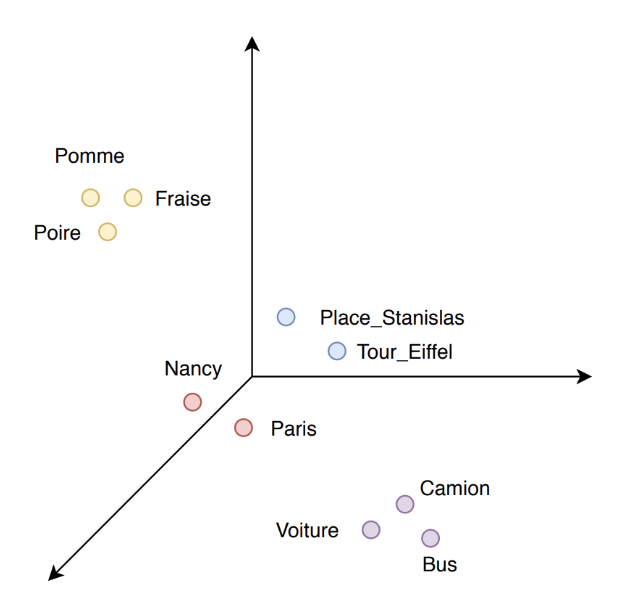

My contribution to this conference was on the classification of textual data via transfer learning methods. More precisely, my work consisted in the evaluation of methods for the substitution of word embeddings: the word embeddings make it possible to encode the semantics of the words in vectors (Figure 1).

Figure 1: word embeddings.

These vectors can significantly improve the classification of texts or be used as additional information for other purposes of natural language processing (automatic translation, named entities resolution, etc.).

However, for specialized data, some words are unknown to the word embeddings. This type of models is frequently trained on sources offering a large volume of content to cover a large semantic field but which has the disadvantage of using a common language that can extract the meaning of words (e.g. use of Wikipedia articles). If a model is trained with Wikipedia articles, it may have difficulty manipulating new content that uses a more technical lexicon. A common example is the field of health. It is likely that Wikipedia does not provide a broad set of biomolecule names.

To overcome these constraints, it is common to use alternative methods. A rigorous study of these substitution methods is therefore necessary and was therefore presented and discussed during these meeting.

This type of semantic information of words, encoded by vectors, has been used in the works of Mickael Febrissy et. al. for non-negative matrix factorization. This method enables clustering of articles.

Clustering is when no explicit example of association between a text and a category is given to the algorithm as an example of learning. The algorithm thus finds coherent groups of articles solely on the basis of their respective contents. The objective is to make emerge texts of the categories without the help of a previously established reading grid.

The use of this method can easily be considered by content producers or broadcasters. The algorithm is thus able to group articles according to their topics. Imagine a publication dedicated to sport, the algorithm would be able to classify the contents of ball sports in one category and motorsports in another.

An alternative to the vector representation of words for encoding semantic information is to use semantic databases. A demonstration was made by Jocelyn Poncelet who was able to apply it to the biomedical field. He highlighted the possibility of applying partitioning methods to biomedical data as well as consumer behavior data in supermarkets.

The portability of the method from one field and one type of data to another offers prospects for concrete application for the companies we support at Kernix.

Use case: Verbatim analysis

In a satisfaction survey, processing returns on open-ended questions can be complex, time-consuming and costly. The use of AI with an unsupervised algorithm, allows not only to emerge groupings without biasing the study but also to consider reusing the model without the need to retrain to adapt to the specificities of the input data.

Transactional data: towards more readability

Behavioral analysis is often derived from transactional data. It is a common practice in e-commerce to optimize the effectiveness of marketing tools such as emailing.

A common example of transactional data exploited comes from a consumer's shopping cart: when she validates an order on an e-commerce site or goes to the cash register in a shop, the purchase is historized to keep the information of the various products purchased together. The association between the customer and the basket is not retained in order not to keep personal data.

This type of data has been the subject of many presentations (see in particular the four presentations of Alexandre Bazin, Tatiana Makhalova, Alexandre Termier and Lamine Diop). The detailed content (articles associated with the presentations) is available in the proceedings of the conference.

These pattern mining methods yield initial throws of results that can be difficult to exploit by teams in different organizations (whether marketing or commercial) due to the abundance of emerging patterns and the level of additional information they carry.

Example: Among the products that are most often found in the basket of French, we find pasta and rice. This association alone provides little additional information.

An alternative method, or complementary, is to use biclustering algorithms: this junction between two research communities was made by the work dealing with the analysis of formal concepts by Nyoman Juniarta et. al. Several presentations focused on lattices, which are at the core of methods for extracting association rules.

Multidimensional Data: Exploiting information from different views

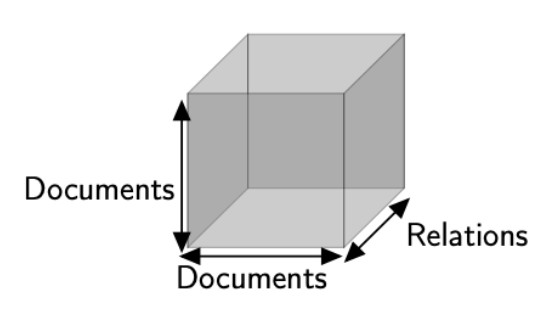

Multidimensional data is present in many fields: one can for example express different relations of a graph using a 3d tensor.

Example: This article contains a link to the home page, and is also listed on Twitter. Each line and each column represents a web page. At the intersection we can note the presence of a link (0 or 1). We can then multiply these types of tables by adding a layer of specific information (“is a link present on a social network”, “is on the same domain name”, etc.). These different arrays are then assembled to form a 3d tensor (Figure 2).

Figure 2: 3d tensor to represent different types of relations between articles (credits: Rafika Boutalbi).

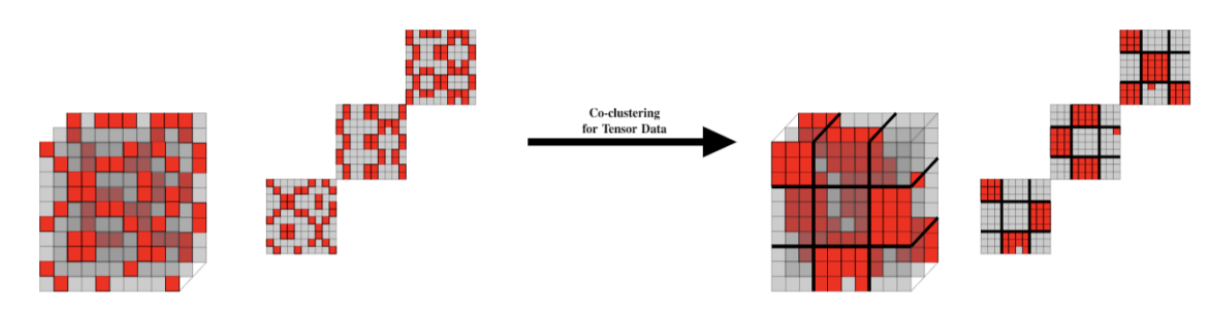

Rafika Boutalbi presented a co-clustering model, allowing to classify two dimensions simultaneously. This type of algorithm called co-clustering has for example been implemented in the Coclust package to which I contributed. Rafika Boutalbi's work is an extension in case several layers of information are available (Figure 3).

Figure 3: Two-dimensional co-clustering of a 3d tensor (credits: Rafika Boutalbi).

Multidimensional data partitioning does not necessarily imply partitioning across multiple dimensions. For example, Véronique Cariou's work focused on the clustering of variables on three-dimensional data. Ndeye Niang's work on multiblock data concerned the clustering of individuals that are described by variables structured in homogeneous blocks.

Time series: two main axes of research

Time series data were used in two works, distinguished mainly by the type of segmentation required.

Milad Leyli-abadi presented a method to detect changes common to a set of categorical sequences. In particular a study of water consumption data, collected thanks to the Linky smart meters, was conducted. The model incorporates weather and calendar information to explain changes in the consumption of the population. Another use case is for example the prediction of the attendance of a museum according to calendar and meteorological data. This work presents a segmentation of time periods. The work presented by Brieuc Conan-Guez focus on a different problem since this time it is to partition series according to their form. This makes it possible to group similar behaviors in the same class. A study comparing the proposed method with the more well known methods "DTW Barycenter Averaging" and "K-Spectral Centroid" was presented.

Learning and sharing

This second participation in the Rencontres de la Société Francophone de Classification was an opportunity for me to discuss various issues that we are confronted with during the realization of data science projects. I found some colleagues, with whom for example I realized a recommendation system of classified ads. These meetings are always enriching because they allow to keep abreast of the latest advances in research in the field of data science. They make it possible to anticipate some difficulties and to draw inspiration from solutions found in different fields of application.